Can very high resolution satellite imagery and artificial intelligence modernise wildlife surveys in Africa?

Authors | Sophie Maxwell (CCF) and Leslie Polizoti (Madikwe Futures Company). Published in 2022.

- African elephant and rhino populations have declined significantly over the past 40 years, making wildlife surveys essential for conservation planning. Traditional aerial surveys are costly and labour-intensive, prompting interest in high-resolution satellite imagery and AI for large mammal detection.

- The Connected Conservation Foundation led a cross-sector collaboration to test AI-assisted wildlife monitoring using 30 cm satellite imagery donated by the Airbus Foundation.

- The study compared three approaches: visual inspection of imagery using the human eye, open-source AI models trained on synthetic data and a bespoke convolutional neural network (CNN). Field teams in Madikwe Game Reserve and Sera Wildlife Sanctuary recorded on-the-ground sightings during satellite pass-overs to validate AI classification and human detections.

- Environmental factors like midday satellite pass-overs, shadows and heterogeneous landscapes challenged detection accuracy for both AI and human inspection of satellite imagery.

- AI detection and human visual inspection of large animals in 30 cm resolution satellite imagery performed well, yet neither gave conservation managers the required 90%+ accuracy in counting and classifying individual animals when compared with on-the-ground sightings.

- Future AI applications may focus on identifying high-density wildlife areas for human validation rather than direct species classification and counting of individuals.

Objectives

Introduction

The populations of African elephants and White and Black rhinos have fallen across Africa over the last 40 years. Wildlife surveys are essential for monitoring changes in population numbers and the movement of species across landscapes. They provide a valuable source of data to inform critical decisions on how best to conserve threatened species. Traditional survey methods in savannah landscapes use aerial counts completed by humans in a plane, but this can be costly and labour-intensive, with variable results.

In 2022, there was a growing interest in using <1m high-resolution satellite imagery and artificial intelligence (AI) technologies to survey large mammals (over 1m in size) across vast and hard-to-reach areas.

Connected Conservation Foundation led a cross-sector collaboration to explore whether these technologies can provide a practical and feasible method of reducing the time and cost of wildlife surveys in savannah environments. This blog summarises the study findings; the full white paper is available to download.

Figure 1: Elephants detected in Pléiades Neo imagery

Study approach

Through Airbus Foundation archived data and new satellite tasking from the Airbus satellites Pléiades and Pléiades Neo, our study endeavoured to build synthetic training data and test the feasibility of three approaches for the detection and identification of animals in very high-resolution satellite images:

- The human eye only, to find and classify different species in the images

- Computer vision and open-source (pre-trained) neural network models

- A combination of computer vision and a bespoke convolutional neural network model, trained on a set of synthetic animal images

Where possible, animal identifications were then compared with on-the-ground sightings reported by field teams around the time of satellite pass-over.

Additionally, as protected areas in savannah environments typically consist of both heterogeneous landscapes (complex, non-uniform terrain and flora) and homogeneous landscapes (open areas with less background variation, e.g. around watering holes), our study sought to detect and classify large animals across both environments.

Methodology

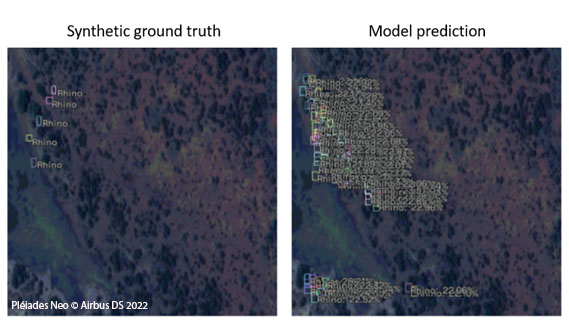

For key taskings, field teams were tactically positioned in the field to count sightings on the ground. Two areas of Madikwe Game Reserve, totalling 200 km², were selected, targeting known areas of wildlife density. The capture was scheduled for 10:33 am South African Standard Time (SAST) on 2 March 2022, at an incident angle <15°. Around the same time, three teams collected field data on wildlife positions between 10:30 am and 12:30 pm.

A similar process was followed for Sera Wildlife Sanctuary, in collaboration with Northern Rangelands Trust’s GIS and Ranger teams. 100 km² of 30 cm Pléiades Neo imagery was captured, but only a small number of target species were sighted at the time (reasons outlined in the report), so the study concentrated on Madikwe.

Field teams took different routes through Madikwe’s tasked areas (shown below), specifically near water points where wildlife (particularly elephants and rhinos) are frequently seen.

Figure 2: Planned field team routes, Madikwe Game Reserve (map data: Google, Airbus/ Maxar Technologies)

During this tasking, 57 animals were identified by field teams around the time of the satellite pass-over. These were plotted by location, with grid references to an area of approximately 500 m × 500 m, or to where the vehicle spotting the animals was likely positioned when the identification was made (see below).

Figure 3: Plotted field sightings locations, Madikwe Game Reserve

Most of the relevant sightings were recorded between 10:44 and 11:40 am. Based on the maximum travel speed of each species (km/h), these animals were expected to have been well within the estimated ranges (km²) around their pins at 10:33 am, when the satellite passed, and so were expected to be visible in the satellite imagery.
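
The range reasoning above can be sketched in a few lines. This is only an illustration of the arithmetic, not the study's own calculation: the function names and the 6 km/h maximum speed are assumptions chosen for the example, while the 10:33 am capture time and the 10:44 am sighting time come from the text.

```python
import math
from datetime import datetime


def max_displacement_km(capture: datetime, sighting: datetime, max_speed_kph: float) -> float:
    """Upper bound on the straight-line distance an animal could have moved
    between the satellite capture and the field sighting."""
    elapsed_hours = abs((sighting - capture).total_seconds()) / 3600.0
    return max_speed_kph * elapsed_hours


def search_area_km2(radius_km: float) -> float:
    """Area of the circle around a pin in which the animal could have been."""
    return math.pi * radius_km ** 2


# Times taken from the text; the speed is a hypothetical value for illustration.
capture = datetime(2022, 3, 2, 10, 33)
sighting = datetime(2022, 3, 2, 10, 44)
ASSUMED_MAX_SPEED_KPH = 6.0  # assumption, not a figure from the study

radius_km = max_displacement_km(capture, sighting, ASSUMED_MAX_SPEED_KPH)
area_km2 = search_area_km2(radius_km)
print(f"search radius: {radius_km:.2f} km, search area: {area_km2:.2f} km2")
```

A later sighting gives a larger radius, so pins recorded closest to the pass-over time are the most useful for comparison with the imagery.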

Because field conservationists were sent out across partner reserves at the time of capture, the cloud-free 30 cm data made it possible, for some pins, to compare elephant detections directly between in-field sightings, human inspection of the 30 cm satellite imagery and AI classifications.

The following three approaches were explored and compared with this ground-truthed data:

Approach 1: The human eye only, to find and classify different species based on field-based expertise and insight

Madikwe's conservation experts, who have substantial local field experience, manually scanned the satellite imagery with the human eye and identified 110 large mammals across the 200 km² Area of Interest (AOI). They used their in-depth knowledge of the reserve and of species behaviours in this landscape to record their understanding and classification of the sightings.

The images below were taken at a 1:100 scale from the 30 cm satellite image captured at the same time as the plotted field sightings above. Using field data, it was concluded that the bottom left image contains only rhinos, and the animals in the rest of the images are elephants. This example indicates how difficult it is to differentiate between rhinos and elephants in 30 cm imagery taken close to the nadir line (the point on the Earth’s surface directly below the satellite).

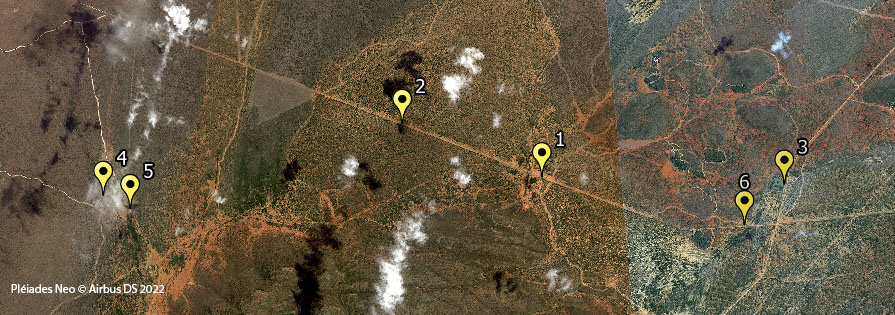
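
To give a rough sense of why the two species are hard to tell apart, the sketch below converts a body length into the number of pixels it spans at a given ground resolution. The body lengths are illustrative assumptions, not measurements from the study, and `pixels_across` is a hypothetical helper.

```python
def pixels_across(object_length_m: float, resolution_m: float) -> float:
    """Number of pixels an object of the given length spans in nadir imagery."""
    if resolution_m <= 0:
        raise ValueError("resolution must be positive")
    return object_length_m / resolution_m


RESOLUTION_M = 0.30  # 30 cm imagery, as used in the study

# Illustrative top-down body lengths in metres (assumptions for this example only).
ASSUMED_LENGTHS_M = {"elephant": 6.0, "rhino": 3.6}

for species, length_m in ASSUMED_LENGTHS_M.items():
    span = pixels_across(length_m, RESOLUTION_M)
    print(f"{species}: about {span:.0f} pixels long")
```

Under these assumptions each animal is only a dozen or so pixels long, which leaves very little detail for distinguishing body shape.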

Approach 2: Computer vision and open-source (pre-trained) neural network models, trained on a set of synthetic animal images taken from drones and down-sized

Dimension Data Engineers identified three state-of-the-art object detector models as being best suited for rhino classification: RetinaNet (using the ResNet50 backbone), EfficientDet (EfficientNet backbone) and YOLOv5 (using the CSPDarkNet backbone and a PANet neck).

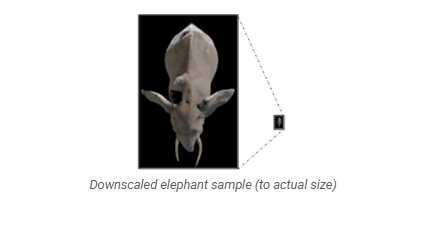

For the purposes of rhino detection, no satellite training data was available for these endangered species. Object detection approaches require a very large amount of training data so that the model can learn differentiating features. This approach therefore developed a synthetic training data set: rhino images were cropped to bounding boxes from drone and aerial imagery of varying resolution, then downsized to the resolution of the satellite sub-images in the training set (10,000 training images across approaches).
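
The downsizing step can be illustrated with simple block averaging. This is a minimal sketch under stated assumptions, not the pipeline used in the study: the 5 cm drone resolution, the function names and the choice of block averaging as the resampling method are all assumptions for the example.

```python
def block_average(image, factor):
    """Downsample a 2D list of pixel values by averaging factor x factor blocks.
    Rows and columns that do not fill a whole block are dropped."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [
                image[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def downsample_to_gsd(image, src_gsd_m, dst_gsd_m):
    """Resample a crop from its source resolution to a coarser target resolution."""
    if dst_gsd_m < src_gsd_m:
        raise ValueError("target resolution must be coarser than the source")
    factor = round(dst_gsd_m / src_gsd_m)
    return block_average(image, factor)


# A hypothetical 12 x 12 pixel drone crop at an assumed 5 cm resolution.
drone_crop = [[float(r + c) for c in range(12)] for r in range(12)]
satellite_like = downsample_to_gsd(drone_crop, src_gsd_m=0.05, dst_gsd_m=0.30)
print(len(satellite_like), "x", len(satellite_like[0]))  # 2 x 2 pixels at 30 cm
```

Each 6 × 6 block of drone pixels collapses into a single satellite-scale pixel, which shows how much detail is lost at 30 cm.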

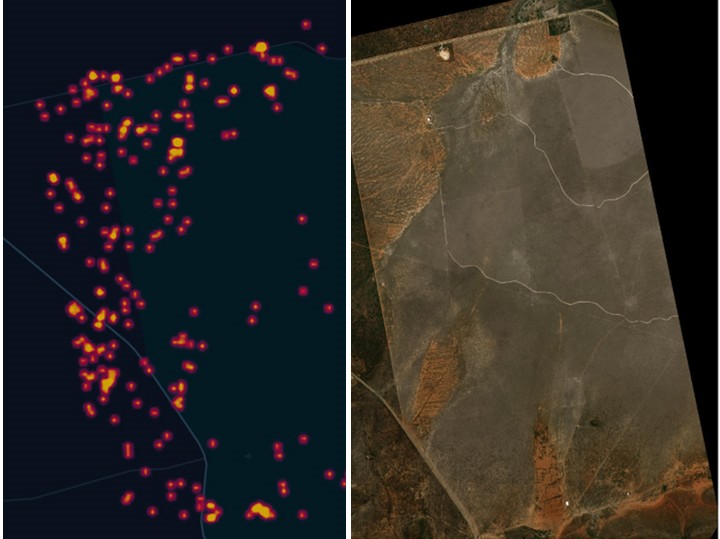

When these trained models were run on 30 cm satellite data of Madikwe Game Reserve, the accuracy of the results was unsatisfactory because of the high number of false positives. However, based on the spread of false positives compared to the synthetic ground truth, these off-the-shelf models could potentially provide a heatmap, a ‘where to look’ guide to potential detections. This may be a useful option for conservation managers (see the candidate for future exploration below).

Approach 3: A combination of computer vision and a bespoke convolutional neural network model, trained on a set of synthetic 3D animal images

For this approach, Dimension Data Engineers used open-sourced computer-generated imagery of the animals to create a synthetic data set to train a convolutional neural network (CNN) model for animal classification. Samples were rendered in 3D from a top-down view, representing the outline and size of the animals as seen from the nadir line. The images were downscaled to match the satellite imagery resolution and stitched into the satellite imagery.
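
The stitching step might look something like the sketch below, which pastes a small animal chip into a background tile and records the bounding box as a training label. It is an assumption-laden illustration: `paste_chip`, the mask convention and the bounding-box format are hypothetical and not taken from the study.

```python
def paste_chip(tile, chip, mask, top, left):
    """Paste a synthetic animal chip into a copy of a satellite tile.

    tile, chip: 2D lists of pixel values.
    mask: 2D list matching chip; truthy where the chip shows the animal.
    Returns the new tile and the bounding box (x_min, y_min, x_max, y_max).
    """
    height, width = len(chip), len(chip[0])
    if top < 0 or left < 0 or top + height > len(tile) or left + width > len(tile[0]):
        raise ValueError("chip does not fit inside the tile at this position")
    out = [row[:] for row in tile]  # copy so the original tile is unchanged
    for i in range(height):
        for j in range(width):
            if mask[i][j]:  # keep background pixels where the mask is empty
                out[top + i][left + j] = chip[i][j]
    bbox = (left, top, left + width, top + height)
    return out, bbox


# Hypothetical 4 x 4 background tile and 2 x 2 animal chip.
background = [[0] * 4 for _ in range(4)]
animal_chip = [[5, 6], [7, 8]]
animal_mask = [[1, 0], [1, 1]]
stitched, label_bbox = paste_chip(background, animal_chip, animal_mask, top=1, left=2)
print(stitched, label_bbox)
```

Because the position of every pasted chip is known, each synthetic image comes with an exact label at no extra annotation cost.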

Challenges

Heterogeneous vs homogeneous environments

The complexity of heterogeneous environments poses a challenge for typical image processing methodologies. Complexity in an image is synonymous with ‘noise’, or random variation of brightness or colour, which makes it extremely difficult to isolate a region of interest from the background.

Time of satellite pass-over

The orbit timings for the Pléiades Neo constellation of satellites were a major constraint for performing wildlife surveys in Kenya. Capture times for geographies nearer the equator fall around 11:30 am to 12:30 pm local time, in the heat of midday. African wildlife often ventures out into the open in the early morning to feed and drink, then retreats to the shade to avoid the heat of the midday sun. In our Kenyan study site, animals were often concealed by vegetation at the time of satellite acquisition. The project focus moved further from the equator, to Madikwe Game Reserve in South Africa, where earlier tasking allowed for better conditions for wildlife sightings and approach testing.

Shadows

Shadows in remotely sensed imagery are produced by objects such as clouds, trees, boulders and mountains. These shadows can reduce the accuracy of information extraction. For example, when elephants are bunched together, shadows and blobs can merge, so the counts from field teams, human inspection of the imagery and AI can all differ. In this 30 cm image, taken at a dam in Madikwe, a group of elephants is under the trees with shadows. It is difficult to accurately count how many are in the yellow box. Human inspection of this image identified five elephants, but this was debated by the field team, who sighted only four in the area.

Additionally, when satellite images are captured at a higher incident angle, the shadows of the animal are more pronounced and play a more significant part in classification predictions. It is extremely challenging to provide enough synthetic training data for all shadow possibilities for each species. This project only focused on images captured close to the nadir line. Higher incident angles could be a future area of research.

Cloud cover

When surveying such large areas, there is a high probability that some clouds will obscure important sightings, even on a largely cloud-free day.

The Madikwe captures were tasked on optimal days, with excellent visibility and clear skies, and the image recorded under 5% cloud cover. In one critical tasking, among the 57 sightings recorded by on-the-ground teams during pass-over, a field ranger sighted two rhinos at a known location. However, this sighting was concealed by the only cloud in the sky that morning. If this had been an annual wildlife survey, these two crucial sightings of a critically endangered species would have been missed.

Results

The practical study found that, although some results showed promise, significant challenges remain in using satellite imagery to survey species in savannah environments. Neither AI detection nor human visual inspection of large animals in 30 cm resolution satellite imagery (incident angle <15°) gave conservation managers the required level of accuracy in counting and classifying individual animals when compared with on-the-ground sightings.
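
One way to make a comparison against ground sightings concrete is to score detections as precision and recall. The source does not state how accuracy was measured, so the metric choice, the function names and the counts below are all assumptions for illustration; only the 90% requirement comes from the study summary.

```python
def detection_metrics(true_positives: int, false_positives: int, false_negatives: int) -> dict:
    """Precision, recall and F1 for detections compared with ground-truth sightings."""
    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def meets_requirement(metrics: dict, threshold: float = 0.90) -> bool:
    """True only if both precision and recall reach the required level."""
    return metrics["precision"] >= threshold and metrics["recall"] >= threshold


# Hypothetical counts, not results from the study: many false positives
# pull precision well below the threshold even when recall is fairly high.
example = detection_metrics(true_positives=40, false_positives=60, false_negatives=10)
print(example, meets_requirement(example))
```

Scoring both measures matters here, because a model that flags many false positives can still find most of the animals that are present.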

Principal research findings included:

Neither human inspection of this imagery nor AI model analysis could distinguish between different species of large mammals in certain situations, including:

- When different species are interspersed in the same area (elephants/rhinos, wildebeest/buffalo), for example near watering holes.

- When juveniles of one species mix with adults of another. For example, a juvenile elephant is hard to tell apart from an adult buffalo or rhino.

- Additionally, when species such as elephants bunch close together, the shadows of several animals merge into one, resembling a ‘blob’, and it is difficult to count exact numbers.

- Cloud cover is a barrier to this survey method. During the study, on-the-ground field teams made many wildlife sightings at the approximate time of the satellite pass-over. However, even on a 96% cloud-free day, vital ground sightings of Black rhinos were obscured by the only small cloud in the region. This resulted in priority sightings not being identified in satellite imagery, which was unsatisfactory to conservation managers.

- Lastly for the AI, there was a lack of animal sightings in the imagery to function as labelled training data. Instead, novel approaches to creating synthetic training data were explored. 3D imagery, and downsized drone imagery, as a representative of real sightings, were used to train the algorithms, with limited success.

Ongoing research is needed to explore new species and situations where 30 cm satellite imagery can be of value, specifically in homogeneous landscapes or seascapes where the objects of interest contrast strongly with their background environment. Use cases could include monitoring colonies or herds of animals in hard-to-reach environments, where greater variability in counting accuracy can be tolerated and mixing between species groups is limited.

Only when aerial drone technologies enable image resolution beyond 30 cm, to <20 cm, should these methods be pursued as a viable individual-wildlife-counting technique for mixed large mammals in mixed (heterogeneous/homogeneous) savannah environments.

A potential use-case for AI monitoring of wildlife in satellite imagery

One notable outcome of this study is that rather than AI providing satisfactory individual counting and locations of animals, the techniques produced a heatmap style of potential sightings, acting as an indicator of ‘where to look’ for human-eyeballing validation within an image (see below). Scanning satellite imagery with the human eye is incredibly time-consuming and requires exceptional diligence, so consulting with an AI model to highlight areas of potential detection could be valuable. Field teams believe this may be useful for certain use cases, especially when locating (rather than individual animal counting) hard-to-find colonies or herds of certain species in remote areas.
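
A ‘where to look’ heatmap of this kind can be sketched by binning detections into grid cells and ranking the cells by summed confidence. This is a hypothetical illustration rather than the models' actual output format: the detection tuples, the cell size and the function names are assumptions.

```python
from collections import Counter


def detection_heatmap(detections, cell_size_px):
    """Sum detection scores per grid cell.

    detections: iterable of (x, y, score) in image pixel coordinates.
    Returns a Counter mapping (row, col) grid cells to summed scores.
    """
    heatmap = Counter()
    for x, y, score in detections:
        cell = (int(y // cell_size_px), int(x // cell_size_px))
        heatmap[cell] += score
    return heatmap


def top_cells(heatmap, n):
    """Grid cells with the highest summed score, for human validation first."""
    ranked = sorted(heatmap.items(), key=lambda item: item[1], reverse=True)
    return [cell for cell, _ in ranked[:n]]


# Hypothetical model outputs: (x, y, confidence score).
detections = [(10, 10, 0.9), (20, 30, 0.8), (150, 40, 0.4), (260, 270, 0.7)]
heatmap = detection_heatmap(detections, cell_size_px=100)
print(top_cells(heatmap, 2))
```

Individual false positives matter less in this setting, because a reviewer only needs the ranked cells to decide which parts of the image to inspect first.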

Conclusion

This study demonstrates both the promise and limitations of using high-resolution satellite imagery and artificial intelligence for wildlife surveys. While AI models and human detection from satellite images showed potential for broad-scale species identification, neither approach consistently matched on-the-ground sightings closely enough for counting and classifying individual animals. Heterogeneous landscapes, shadow interference and satellite pass-over timing all contributed to variability in detection rates.

However, AI-assisted analysis showed promise in generating heat maps of potential wildlife locations, providing a valuable tool for conservationists to focus human-led validation efforts. While current satellite resolutions (30 cm) limit individual species identification with confidence, future advancements, such as <20 cm resolution imaging, may enhance the viability of these methods for large-mammal monitoring. Moving forward, targeted applications in homogenous environments and hard-to-reach landscapes could maximise the value of satellite-based monitoring.

Next steps

Building on this work, collaborators now seek to identify the species and situations around the world where 50 cm and 30 cm resolution satellite imagery can be of value, especially in homogenous, hard-to-reach environments, where greater variability in accuracy can be tolerated.

In response, the Airbus Foundation and the Connected Conservation Foundation launched the Satellites for Biodiversity Award, which is now in its third round. The grant scheme, launched in 2022, aims to accelerate the use of high-resolution satellite imagery for biodiversity conservation. Projects in Namibia, Thailand, Papua New Guinea, Kenya, Ethiopia, Nepal, Peru and South Sudan have received satellite data and funding to enhance global conservation efforts. Results from grantees' work will be showcased in this Satellite Hub, to help guide conservation organisations in the effective use of high-resolution (HR) satellite imagery and artificial intelligence (AI) to accelerate biodiversity and ecosystem monitoring and protection.

Collaboration and acknowledgements

At Connected Conservation Foundation, we are proud to have led an innovative study into the feasibility of using artificial intelligence (AI) techniques and satellite images in wildlife surveys. This study would not have been possible without the collaborative resources and skills of our partners, and we are truly thankful.

Our Partners

We are grateful for the generous donation of satellite imagery from Pléiades Neo, the new 30 cm-resolution optical constellation, from the Airbus Foundation. Furthermore, Microsoft funded and provided us with computational resources through the AI for Earth programme.

We would also like to thank our other partners for playing a valuable role in this study:

- Dimension Data Applications

- NTT Ltd

- Northern Rangelands Trust (NRT)

- Madikwe Futures Company NPC (Madikwe)

Madikwe Futures Company

Project Leads

Leslie Polizoti and Koos Potgieter

Madikwe Futures Company is a first-of-its-kind conservation model in Madikwe Game Reserve, helping uplift local communities, protect Madikwe’s wildlife and save rhinos for the world.

The Northern Rangelands Trust (NRT)

Project Leads

Kieran Avery, Samson Kuraru, Kasaine Letiktik and Antony Wandera

The Northern Rangelands Trust (NRT) is a membership organisation owned and led by the 45 community conservancies it serves in Kenya and Uganda. NRT was established as a shared resource to help build and develop community conservancies, which are best positioned to enhance people’s lives, build peace and conserve the natural environment.